Fuzzer-V

TL;DR

An overview of a fuzzing project that targets the Hyper-V VSPs, using Intel Processor Trace (IPT) for coverage-guided fuzzing. It is built upon WinAFL, winipt, hAFL1, and Microsoft’s IPT.sys.

Introduction

One of our habits at CyberArk Labs is to choose targets that are difficult to fuzz, and fuzz them anyway. For example, see this blog post on fuzzing RDP and this talk on fuzzing Windows drivers. In this blog post, we target the kernel components of Hyper-V. Hyper-V is Microsoft’s hypervisor and lies at the core of Azure. As such, it is of crucial importance, and any vulnerability in it puts millions of customers at risk. With this blog post and our open source repository, we hope to give the reader an easy path to fuzzing Hyper-V and to help audit current cybersecurity efforts.

So far, we have not found new vulnerabilities using Fuzzer-V. However, we verified its value on several test cases, and we believe it is mature enough to share with the community.

Hyper-V Architecture

Microsoft has some great documentation on Hyper-V (example here). Here is what’s relevant to us:

- Hyper-V consists of the hypervisor, whose responsibility is to split the machine into partitions and to manage the interactions between them. There is always one special partition running Windows, called the root partition, also referred to as the Host. All other partitions are called child partitions, also referred to as virtual machines (VMs).

- VMs do not have direct access to the hardware; rather, they see a “virtualized reality” where the hardware they interact with is emulated by the root partition or the hypervisor. Child partitions can be enlightened (aka para-virtualized), meaning they know they are running as a VM, or unenlightened if they do not. Enlightened child partitions run modified operating systems that take advantage of this knowledge by making hypercalls to interact with the hypervisor, and by sending messages over a VMBUS channel to interact directly with the root partition.

- For a child partition to interact directly with the root partition, the root partition must implement a virtual service provider (VSP) and the child partition a corresponding virtual service client (VSC). When the VM starts, the root partition offers VMBUS channels for all supported VSPs, and the child partition accepts those for which it has the VSC counterpart.

Attack Surface and Previous Works

The holy grail, from an attacker’s standpoint, is a VM escape: starting from code execution on the VM, reaching code execution on the Host. The attack surface for a VM escape in Hyper-V is limited. Unless optional features are configured (RDP connection features, file sharing, etc.), it consists of the cases where the VM needs to access the physical world (internet, host storage, etc.). In every such case, the host is responsible for emulating the outside resource, translating the resource as perceived by the VM into the real-world resource, and vice versa. The emulation occurs in three places, which we treat as the main attack surfaces:

- VMWP.exe – The VM worker process, a process on the host that is invoked whenever a VM trap is reached, i.e., when the hypervisor intercepts a sensitive operation performed by the VM and lets the host emulate it on behalf of the VM. For example, a function in VMWP is called whenever the VM uses the out instruction to write to an I/O port.

- Hypercall callbacks – Callbacks in the hypervisor itself are called when the VM performs a hypercall. A list of hypercalls is available here. Note that some hypercalls are only available from the root partition and are therefore less interesting from an attacker point of view.

- VSP – Drivers on the host that expose a virtual service to the VM. For instance, the network VSP is called vmswitch, and its VSC counterpart is netvsc.

Attack surface #1 was studied by Microsoft; see examples here, here, and this fuzzing setup. Attack surface #3 was also studied by Microsoft; see here and here.

We chose to focus on attack surface #3. Our starting point was the hAFL1 and hAFL2 fuzzing setups. We are currently unaware of public research into attack surface #2.

Let’s Go!

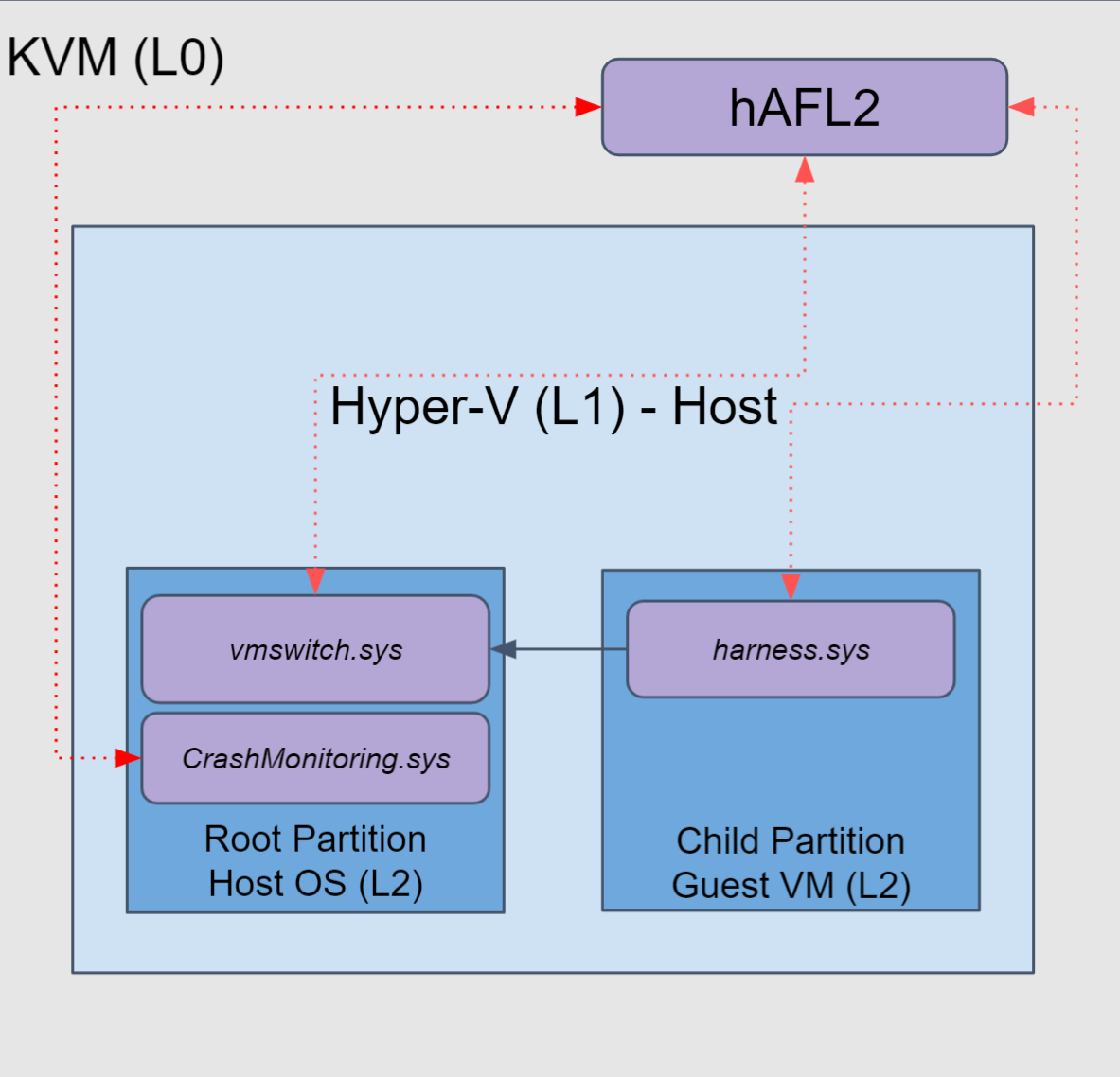

Going into this research, our plan was to use hAFL2 (Figure 1), which uses nested virtualization. It runs Linux on the physical machine (Layer 0), a KVM VM running Hyper-V on top of it (Layer 1), and the root and child partitions under Hyper-V (Layer 2). The target of the fuzzer is a VSP callback in the Layer 2 root partition that is called whenever a message is sent on the VMBUS channel. The setup is as follows:

- The fuzzer runs in Layer 0. It generates inputs to be sent to the target function and transfers them to the child partition in Layer 2 using a KVM hypercall.

- The harness runs in the child partition in Layer 2. It receives the inputs and sends them to the root partition via the VMBUS channel.

- The target function runs in the root partition in Layer 2. It is monitored using IPT.

- The fuzzer reads the IPT packets directly for code coverage.

Figure 1: hAFL2 setup

We implemented (really, modified) the necessary Windows components for Layer 2; however, despite tireless efforts, we were unable to close the loop and read the IPT packets in Layer 0.

If at First You Cannot Fuzz – Try, Try Again

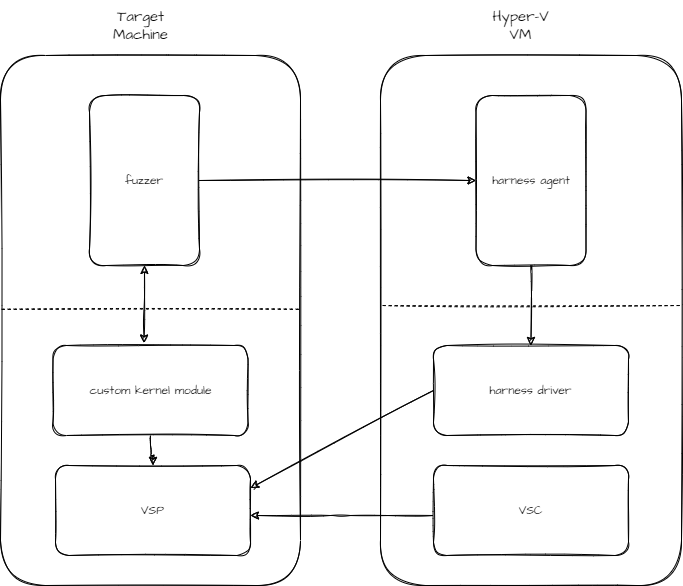

At this point, we had to either pivot or drop the research. We decided to give it another shot, implementing our own simpler* design (Figure 2). The basic idea was to abandon the Linux machine and run everything on the Windows side.

The New, Fuzzier Setup

- The fuzzer now runs on the target physical Windows machine. It generates inputs and sends them to a VM over the network (or a shared folder).

- The harness runs on the VM. It receives the inputs and sends them back to the target machine over the VMBUS channel.

- The target function is a callback on the target machine that processes messages on the VMBUS channel. It is hooked by a custom kernel module: when a message is received, IPT is enabled for the current thread, the original function is called, IPT is disabled, and the IPT packet is reported back to the fuzzer.
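The hooking step in the last bullet can be illustrated with a user-mode sketch. It models the channel as a structure holding a function pointer, saves the original callback, and swaps in a hook that enables tracing, forwards the call, disables tracing, and reports the trace. All names here (`sim_channel`, `enable_trace`, `report_trace`, and so on) are hypothetical placeholders rather than Fuzzer-V code; the real hook lives in a kernel module, patches the processing callback inside a live VMBCHANNEL, and drives IPT instead of setting a flag.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified stand-in for a channel; the real VMBCHANNEL layout
   is build-specific and is only reachable through offsets. */
typedef void (*processing_cb)(const uint8_t *buffer, uint32_t size);

struct sim_channel {
    processing_cb processing_callback;  /* the slot the hook overwrites */
};

static processing_cb original_callback;  /* saved so the hook can forward calls */
static int tracing;                      /* stands in for per-thread IPT state */
static int traces_reported;

/* Placeholders for "enable IPT", "disable IPT" and "report the trace". */
static void enable_trace(void)  { tracing = 1; }
static void disable_trace(void) { tracing = 0; }
static void report_trace(void)  { traces_reported++; }

/* The hook: trace only for the duration of the original callback. */
static void hook_callback(const uint8_t *buffer, uint32_t size)
{
    enable_trace();
    original_callback(buffer, size);
    disable_trace();
    report_trace();
}

/* Save the original callback and redirect the channel to the hook. */
static void install_hook(struct sim_channel *ch)
{
    assert(ch->processing_callback != hook_callback);  /* never hook twice */
    original_callback = ch->processing_callback;
    ch->processing_callback = hook_callback;
}

/* Example target: records whether it ran while tracing was enabled. */
static int ran_while_tracing;
static void target_callback(const uint8_t *buffer, uint32_t size)
{
    (void)buffer;
    (void)size;
    ran_while_tracing = tracing;
}
```

In a real driver, the pointer swap would presumably need to be atomic (for example with `InterlockedExchangePointer`), since messages may arrive while the hook is being installed, and the hook would first check whether the message came from the fuzzer.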

We used packet tagging to differentiate fuzzer-generated messages from “background noise” (Hyper-V doing its thing). For the interested reader, we recommend the technical explanations in our previous blog post.
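One way such tagging could work is sketched below: the fuzzer prepends a marker to each input, and the host-side hook only traces messages that start with it. The 8-byte magic value, the one-byte instance ID, and the names `tag_message` and `has_fuzz_tag` are all hypothetical; the actual marker format is not described in this post. The instance ID hints at how the parallelization discussed in the footnote could tell fuzzer instances apart.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical 8-byte marker; not the real Fuzzer-V tag format. */
static const uint8_t FUZZ_MAGIC[8] = { 'F', 'U', 'Z', 'Z', 'E', 'R', '-', 'V' };
#define FUZZ_HDR_LEN (sizeof FUZZ_MAGIC + 1) /* magic + 1-byte instance ID */

/* Fuzzer side: prepend the marker and instance ID to an input.
   Returns the tagged length, or 0 if `cap` is too small. */
size_t tag_message(uint8_t *out, size_t cap, uint8_t instance,
                   const uint8_t *input, size_t len)
{
    assert(out != NULL && (input != NULL || len == 0));
    if (cap < FUZZ_HDR_LEN || len > cap - FUZZ_HDR_LEN)
        return 0;
    memcpy(out, FUZZ_MAGIC, sizeof FUZZ_MAGIC);
    out[sizeof FUZZ_MAGIC] = instance;
    if (len > 0)
        memcpy(out + FUZZ_HDR_LEN, input, len);
    return FUZZ_HDR_LEN + len;
}

/* Host side: does this buffer carry the tag of fuzzer instance `instance`? */
int has_fuzz_tag(const uint8_t *buf, size_t size, uint8_t instance)
{
    return size >= FUZZ_HDR_LEN &&
           memcmp(buf, FUZZ_MAGIC, sizeof FUZZ_MAGIC) == 0 &&
           buf[sizeof FUZZ_MAGIC] == instance;
}
```

Note that a prefix changes the bytes the VSP parses, so in practice the marker has to sit somewhere the target tolerates it (or be stripped before the original callback runs); the sketch only shows the matching logic.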

*This solution is simpler in that only two machines take part: the target machine and an auxiliary VM. This comes at the cost of running the fuzzer on the target machine, which makes parallelization more complicated. Indeed, we have not implemented parallelization yet; essentially, one would need to use a different marker for each fuzzer instance and route the IPT packet accordingly.

Figure 2: Fuzzer-V setup

Nested virtualization and IPT

Our original plan was to run the target machine as a VM under Hyper-V with nested virtualization enabled. According to Microsoft’s documentation this is possible, but unfortunately (as mentioned in the article), IPT and nested virtualization cannot be enabled at the same time. Hence, we resorted to using a physical machine as the target machine. This is clearly suboptimal: every crash, whether found by the fuzzer or caused by a bug in the custom kernel module, leads to a BSOD of the physical machine and halts the fuzzing process.

Customizing WinAFL

For the fuzzer we chose to modify WinAFL to support our use case. There are many good fuzzers to start with, but we chose WinAFL for a few reasons:

- It is great!

- It already had support for IPT packet processing.

- We had previous experience modifying it for unusual targets.

We modified WinAFL to send the generated inputs through a custom DLL to the auxiliary VM and receive the IPT packets from our custom kernel module.

In order to obtain the full code coverage from an IPT packet, the fuzzer needs access to the full memory map of the target modules that are encountered in the trace. So, we let our custom kernel module expose the full memory map of all loaded kernel modules (do not try this at home – this is, by definition, a complete info leak!)
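To see why the memory map is needed: IPT packets record only control-flow information (taken/not-taken bits and branch targets), so the decoder has to read the actual instruction bytes of each module to reconstruct the executed path, and coverage is typically attributed to offsets within a module rather than to absolute addresses, which change across boots. The sketch below shows only the lookup step, mapping an absolute address to a module and an offset; the `module_info` layout, the function name, and the example addresses are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One entry of a hypothetical module map exported by the kernel module. */
struct module_info {
    const char *name;
    uint64_t base;
    uint64_t size;
};

/* Map an absolute address from a decoded trace to (module, offset).
   Returns the index of the containing module, or -1 if no module contains it. */
int resolve_address(const struct module_info *mods, size_t count,
                    uint64_t addr, uint64_t *offset)
{
    assert(offset != NULL);
    for (size_t i = 0; i < count; i++) {
        /* written as a subtraction so base + size cannot overflow */
        if (addr >= mods[i].base && addr - mods[i].base < mods[i].size) {
            *offset = addr - mods[i].base;
            return (int)i;
        }
    }
    return -1;
}
```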

Messing with IPT

Microsoft implemented the IPT interaction in a kernel module named IPT.sys. It exposes several IOCTLs that let the user manage its various options (see winipt for a user-mode library that implements these IOCTLs). However, we needed the ability to turn IPT on and off for a single thread from within that thread (when it reaches the target function with a marked message), which is not supported. To overcome that, we reversed IPT.sys and came up with the following solution:

- At startup, we enabled IPT for all processes.

- At startup, we called the inner functions of IPT.sys to disable IPT for all threads in the traced processes.

- At runtime, we called the inner functions of IPT.sys to enable IPT for the thread running the target function.

```c
// called at startup for (almost) all processes
VOID init_process_tracing(HANDLE pid)
{
    HANDLE process_handle = open_process(pid);

    // start tracing the process (internally sends an IOCTL to IPT.sys)
    // inadvertently starts a trace for all threads in the process
    start_process_tracing(process_handle);

    // stop the trace on all threads of the target process
    // internally runs the IPT.sys function StopTrace on every thread of the process
    stop_trace(FALSE, FALSE, TRUE, pid);

    ZwClose(process_handle);
}

// called at startup to disable tracing for all threads of the process
VOID stop_trace(..., BOOLEAN all, HANDLE pid)
{
    PEX_RUNDOWN_REF ref = get_process_context(pid, TRUE);
    if (ref != NULL)
    {
        if (all)
        {
            // call IPT.sys!StopTrace on all threads using IPT.sys!RunOnEachThread
            pRunOnEachThread(ref[5].Count, (PVOID)pStopTrace, (PVOID)FALSE);
        }
        ...
    }
}
```

```c
// this is the hook function of the target function
// it is called whenever a message is sent on the VMBUS channel,
// both for fuzzer messages and regular messages
VOID processing_hook_function(VMBCHANNEL channel, VMBPACKETCOMPLETION packet, PVOID buffer, UINT32 size, UINT32 flags)
{
    // the DPC runs in the context of whichever process was interrupted
    HANDLE pid = PsGetCurrentProcessId();

    // check if this is our message
    if (is_fuzz_message(buffer, size, &defer_to_passive) && !stop_processing)
    {
        // this is us
        // check if the running process is traced by IPT
        if (get_process_context(pid, FALSE) != NULL)
        {
            // process is traced, we are ON!
            // start the trace on the current thread
            start_trace(FALSE, pid);

            // call the target function
            original_packet_processing_callback(channel, packet, buffer, size, flags);

            // pause the trace and save it to a buffer
            pause_trace();
            save_current_trace();

            // queue a worker to send the IPT packet to the fuzzer
            IoQueueWorkItem(coverage_work_item, &coverage_work_item_routine, DelayedWorkQueue, (PVOID)pid);
        }
    }
}

// called at runtime to enable tracing for the currently running thread
VOID start_trace(BOOLEAN all, HANDLE pid)
{
    PEX_RUNDOWN_REF ref = get_process_context(pid, TRUE);
    if (ref != NULL)
    {
        if (all)
        {
            // call IPT.sys!StartTrace on all threads using IPT.sys!RunOnEachThread
            pRunOnEachThread(ref[5].Count, (PVOID)pStartTrace, ref);
        }
        else
        {
            // call IPT.sys!StartTrace on the current thread
            pStartTrace(ref);
        }
    }
}
```

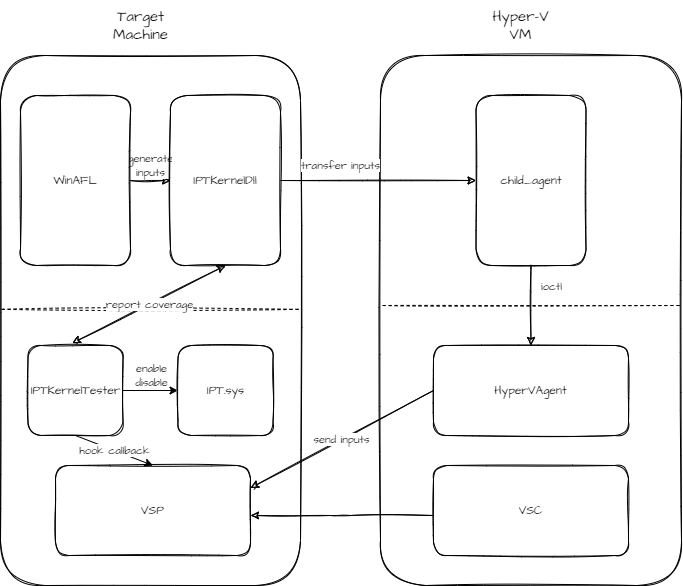

Complete setup

Figure 3: Complete setup

Our complete setup (Figure 3) consists of the modified WinAFL and IPTKernelDll (using the custom input processing feature) as the fuzzer, child_agent and HyperVAgent as the harness on the VM, and IPTKernelTester as the custom kernel module on the host.

Execution context

Our target function is a callback function whose job is to process messages on a specific VMBUS channel. Messages are delivered to the callback function as a DPC, so it runs at an elevated IRQL (DISPATCH_LEVEL). That means that whenever a message is sent on the VMBUS channel, the system interrupts some arbitrary thread and runs the callback function on it. The elevated IRQL means that the function cannot call blocking functions and must return quickly. The arbitrary thread context means that we cannot know in advance which thread (or process) will run the callback function. This causes several issues:

- Some operations are not allowed at DISPATCH_LEVEL. As a result, we could not enable IPT for a process at this point, which is why we had to do so at startup.

- We cannot know in advance which process will receive the DPC, so we had to enable IPT in advance for all processes.

- Some processes (most importantly the System process) are untraceable. To overcome that, we do not trace these processes; if we encounter one of them at runtime, we let the call through untraced and tell the fuzzer to try again with the same input, until a traceable process is reached. The following snippets show how we did that.

```c
// this is the hook function of the target function
// it is called whenever a message is sent on the VMBUS channel,
// both for fuzzer messages and regular messages
VOID processing_hook_function(VMBCHANNEL channel, VMBPACKETCOMPLETION packet, PVOID buffer, UINT32 size, UINT32 flags)
{
    // the DPC runs in the context of whichever process was interrupted
    HANDLE pid = PsGetCurrentProcessId();

    // check if this is our message
    if (is_fuzz_message(buffer, size, &defer_to_passive) && !stop_processing)
    {
        // this is us
        // check if the running process is traced by IPT
        if (get_process_context(pid, FALSE) == NULL)
        {
            // we do not trace the current process
            // nothing to trace: we must still let the call through
            original_packet_processing_callback(channel, packet, buffer, size, flags);

            // queue a worker to tell the fuzzer to re-send
            IoQueueWorkItem(coverage_work_item, &coverage_work_item_routine, DelayedWorkQueue, NULL);
        }
    }
}
```

```c
// this function is called by WinAFL for every new input
// it sends the input to the VM over the network and waits for an IPT packet from IPTKernelTester
// if the call to IPTKernelTester returned with no data, it re-sends the input
IPT_KERNEL_API UCHAR APIENTRY dll_run_target_pt(char * buffer, long size, DWORD timeout, PVOID * trace)
{
    UCHAR rc = 0;
    BOOL collected = FALSE;
    ...
    // until IPT data is returned: send the message and wait for data to be collected
    while (!collected)
    {
        rc = send_message(local_buffer, size);
        if (rc)
        {
            // failed to send the message to the VM - try again
            continue;
        }

        // get the resulting IPT data from IPTKernelTester
        collected_trace_size = collect_coverage(timeout, trace);
        if (collected_trace_size > 0)
        {
            collected = TRUE;
        }
        else
        {
            // returned with no data (no coverage from this process) - try again
        }
    }
}
```

Resending messages when the process is not traced created an unusual phenomenon: when CPU usage is low, most DPCs are dispatched to the System process itself and are therefore not traced, so each message must be re-sent many times. However, if we deliberately created noise in some other process (e.g., an infinite loop), most DPCs were dispatched to the noisy process and were traced. So, counter-intuitively, our fuzzer was 10-20 times faster when the machine was busy.
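A simple probabilistic model makes this effect plausible. Assuming each send independently lands on a traced process with probability p, the number of sends per input follows a geometric distribution with mean 1/p, so, if every send costs about the same, throughput scales roughly with p. The probabilities used below are hypothetical and only show that a 10-20x difference corresponds to p rising from about 5% to 50-100%; they are not measurements.

```c
#include <assert.h>

/* Expected number of sends per input when each send is traced independently
   with probability p (geometric distribution): E[N] = 1 / p. */
double expected_sends(double p)
{
    assert(p > 0.0 && p <= 1.0);
    return 1.0 / p;
}

/* Throughput gain from raising the traced-hit probability from p_idle to p_busy,
   assuming every send costs roughly the same time. */
double speedup(double p_idle, double p_busy)
{
    return expected_sends(p_idle) / expected_sends(p_busy);
}
```

For example, with the hypothetical values p_idle = 0.05 and p_busy = 0.5, an idle machine needs about 20 sends per input versus 2 on a busy one, a tenfold difference.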

How to Run the Fuzzer

Prerequisites

- Verify that your host runs Windows 11 version 10.0.22621.963 (other versions require some adjustments).

- Verify that your host supports IPT.

- A Hyper-V VM running Windows 11 version 10.0.22621.963 (other versions require some adjustments).

Setup

- Disable driver signature verification.

- Clone this git repository with --recurse-submodules to clone WinAFL as well.

- Compile IPTKernelTester.sys, IPTKernelDll.dll, WinAFL, child_agent.exe, and HyperVAgent.sys.

- Copy IPTKernelTester.sys, IPTKernelDll.dll, WinAFL to the host.

- Copy child_agent.exe, HyperVAgent.sys to the VM.

- Run the copy_ip_to_vm.ps1 script as admin on the host.

Execution

- Run the child_starter.ps1 script on the VM to activate the harness.

- Execute WinAFL on the host with the following command:

```
.\afl-fuzz.exe -i in -o out -S 01 -P -t 30000 -l C:\IPTKernel\IPTKernelTester\IPTKernelDll.dll -- -nopersistent_trace -decoder full_ref -fuzz_iterations 1000000 -- test.exe
```

Test case

- Change the value of INSERT_CRASH to TRUE in IPTKernelTester.sys and recompile.

- Execute (as per the previous section).

- This should result in a blue screen caused by an exception at target_function + CRASH_OFFSET, showing what to expect when the fuzzer encounters a bug.

Handling system updates

Some values in the program are OS-version-specific and need to be updated manually after every system update. These consist of:

- Function and global variable offsets defined in IPTKernelTester: channel.h, ipt.h, kernel_modules.h.

- VMBCHANNEL offsets defined in IPTKernelTester: channel.h and in HyperVAgent: channel.h

- GUID of the target channel defined in child_agent: child_agent.cpp

Finding the first set of values is straightforward: you only need to reverse the relevant driver and find each offset from the driver’s base address. For example, one can use the following command in WinDbg to find the offset of the StartTrace function inside IPT.sys:

```
? ipt!StartTrace - ipt
```
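At runtime, such an offset is added to the module’s current load address, which changes across boots because of KASLR. Because a stale offset on a different build would send a call into unrelated code and most likely crash the host, it can help to key the offsets by build number and refuse to run on unknown builds. The sketch below shows both ideas; the table layout, the function names, and the `0x1234` value are hypothetical placeholders, not real offsets for any Windows build.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-build offset table; 0x1234 is a placeholder, not a real offset. */
struct offset_entry {
    uint32_t build;               /* e.g. 22621 */
    uint32_t revision;            /* update build revision, e.g. 963 */
    uint64_t start_trace_offset;  /* value obtained with "? ipt!StartTrace - ipt" */
};

static const struct offset_entry OFFSETS[] = {
    { 22621, 963, 0x1234 },
};

/* Look up the offset for the running build; returns 0 if the build is unsupported. */
uint64_t lookup_offset(uint32_t build, uint32_t revision)
{
    for (size_t i = 0; i < sizeof OFFSETS / sizeof OFFSETS[0]; i++)
        if (OFFSETS[i].build == build && OFFSETS[i].revision == revision)
            return OFFSETS[i].start_trace_offset;
    return 0;
}

/* Runtime address = current module base + build-specific offset. */
uint64_t resolve_function(uint64_t module_base, uint64_t offset)
{
    assert(offset != 0);  /* 0 means "unsupported build": do not call anything */
    return module_base + offset;
}
```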

For the second set, one needs to debug a Hyper-V Host and VM (or crash them and debug the dump) to identify the data patterns in an active VMBUS channel. The starting point is vmbkmclr!KmclChannelList for the host and vmbkmcl!KmclChannelList for the VM. These are linked lists of VMBCHANNEL structures.

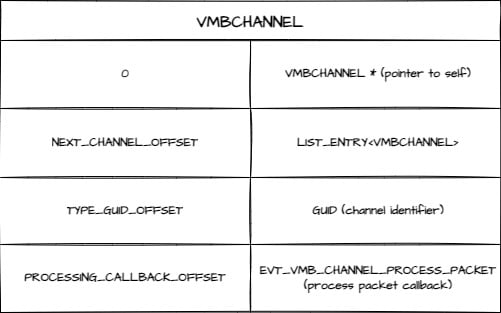

VMBCHANNEL is the structure that defines the VMBUS channel, and it may differ between the Host and the VM if they run different OS versions. The important offsets for our use case are the following:

- NEXT_CHANNEL_OFFSET_IN_VMBCHANNEL – offset of the linked list entry to traverse the list.

- TYPE_GUID_OFFSET_IN_VMBCHANNEL – offset of the GUID to identify the channel.

- PROCESSING_CALLBACK_IN_VMBCHANNEL – offset of the target function used to hook the channel.

- Offsets of other callbacks to hook for logging.

While the offsets change between OS versions, they do not change much, so the previous offsets are a good starting point.

- Every VMBCHANNEL conveniently starts with a pointer to itself, so one can find NEXT_CHANNEL_OFFSET_IN_VMBCHANNEL by searching for an address that points to itself close to *vmbkmclr!KmclChannelList - (previous NEXT_CHANNEL_OFFSET_IN_VMBCHANNEL). The distance from that self-pointing address to *vmbkmclr!KmclChannelList is the new offset.

- There are two consecutive GUIDs inside VMBCHANNEL, so one can find TYPE_GUID_OFFSET_IN_VMBCHANNEL by searching for 0x20 consecutive “random” bytes inside the otherwise very structured VMBCHANNEL. Note that we want the second of the two GUIDs.

- VMBCHANNEL contains an array of callbacks, so one can find PROCESSING_CALLBACK_IN_VMBCHANNEL by searching for an array of function addresses. Note that not all callbacks need to appear in every channel. For example (Figure 4), not all channels are bi-directional, so the processing callback doesn’t always appear.
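The three heuristics above can be expressed as simple scans over a memory dump of the region around a channel. The sketch treats the dump as an array of qwords (or bytes) together with the virtual address at which it was captured. The function names, the thresholds, and the use of a distinct-byte count as a stand-in for “random-looking” are assumptions for illustration, not the exact procedure used in this research.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Heuristic 1: a qword whose value equals its own address (the self-pointer
   at the start of each VMBCHANNEL). Returns the qword index, or -1. */
long find_self_pointer(const uint64_t *mem, size_t count, uint64_t base)
{
    for (size_t i = 0; i < count; i++)
        if (mem[i] == base + i * sizeof(uint64_t))
            return (long)i;
    return -1;
}

/* Heuristic 2: the first run of at least `min_run` consecutive qwords that all
   point into [code_lo, code_hi), i.e. a candidate callback array. Returns index or -1. */
long find_pointer_run(const uint64_t *mem, size_t count,
                      uint64_t code_lo, uint64_t code_hi, size_t min_run)
{
    assert(min_run > 0);
    size_t run = 0;
    for (size_t i = 0; i < count; i++) {
        run = (mem[i] >= code_lo && mem[i] < code_hi) ? run + 1 : 0;
        if (run == min_run)
            return (long)(i + 1 - min_run);
    }
    return -1;
}

/* Heuristic 3: a qword-aligned 0x20-byte window that looks random, approximated
   here as containing at least `min_distinct` different byte values.
   Returns the byte offset of the window, or -1. */
long find_random_window(const uint8_t *bytes, size_t len, size_t min_distinct)
{
    for (size_t off = 0; off + 32 <= len; off += 8) {
        uint8_t seen[256] = {0};
        size_t distinct = 0;
        for (size_t j = 0; j < 32; j++)
            if (!seen[bytes[off + j]]++)
                distinct++;
        if (distinct >= min_distinct)
            return (long)off;
    }
    return -1;
}
```

Since the type GUID is the second of the two GUIDs, it would sit at the window offset returned by the third heuristic plus 0x10.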

Figure 4: VMBCHANNEL offsets

Finally, we can use the callbacks to identify the relevant channel (the one whose callbacks point into the target VSP) and read the GUID of the target channel at TYPE_GUID_OFFSET_IN_VMBCHANNEL.
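Putting the offsets together, locating the target channel amounts to walking the channel list and checking where each processing callback points. The user-mode simulation below assumes the list is a standard circular doubly linked list (Flink/Blink entries, as in the Windows LIST_ENTRY) embedded at NEXT_CHANNEL_OFFSET_IN_VMBCHANNEL, and that the target channel is recognized by its processing callback lying inside the target VSP driver’s image range. The offset values are placeholders, the function name is hypothetical, and a kernel-side version would read live memory instead of test structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Minimal LIST_ENTRY look-alike (Windows uses Flink/Blink). */
struct list_entry { struct list_entry *flink, *blink; };

/* Placeholder offsets; the real values are build-specific. */
#define NEXT_CHANNEL_OFFSET_IN_VMBCHANNEL 0x10
#define TYPE_GUID_OFFSET_IN_VMBCHANNEL    0x30
#define PROCESSING_CALLBACK_IN_VMBCHANNEL 0x40

/* Walk the circular list starting at `head` and return the first channel whose
   processing callback lies in [lo, hi), copying its type GUID into `guid_out`.
   Returns NULL if no channel matches. */
const uint8_t *find_channel_by_callback(const struct list_entry *head,
                                        uint64_t lo, uint64_t hi,
                                        uint8_t guid_out[16])
{
    assert(head != NULL && guid_out != NULL);
    for (const struct list_entry *e = head->flink; e != head; e = e->flink) {
        /* CONTAINING_RECORD-style: step back from the embedded entry to the channel start */
        const uint8_t *ch = (const uint8_t *)e - NEXT_CHANNEL_OFFSET_IN_VMBCHANNEL;
        uint64_t cb;
        memcpy(&cb, ch + PROCESSING_CALLBACK_IN_VMBCHANNEL, sizeof cb);
        if (cb >= lo && cb < hi) {
            memcpy(guid_out, ch + TYPE_GUID_OFFSET_IN_VMBCHANNEL, 16);
            return ch;
        }
    }
    return NULL;
}
```

Channels without a processing callback (a null slot, as with the one-directional channels in Figure 4) simply fail the range check and are skipped.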

Conclusion and Next Steps

So far, we have not found new vulnerabilities using Fuzzer-V. We intend to enhance it and target other VSPs as well.

While there are many technical details behind the scenes, Fuzzer-V is a simple solution to set up a Hyper-V fuzzer. It requires no modification of the host machine, making it simple to install and run.

Using and contributing

We encourage the community to use our code and ideas for further vulnerability research. We will greatly appreciate any contribution or suggestion to the project.